AI deposition summaries are being sold alongside sworn transcripts, raising a question older than the technology itself: when interpretation travels with the record, does neutrality follow it? Advisory Opinion 32 was written to protect public confidence in the reporter’s role, not to regulate keyboards. Replacing a human summarizer with software may change the tool, but it does not automatically eliminate the appearance concerns the rule was meant to prevent.

Tag Archives: ProfessionalResponsibility

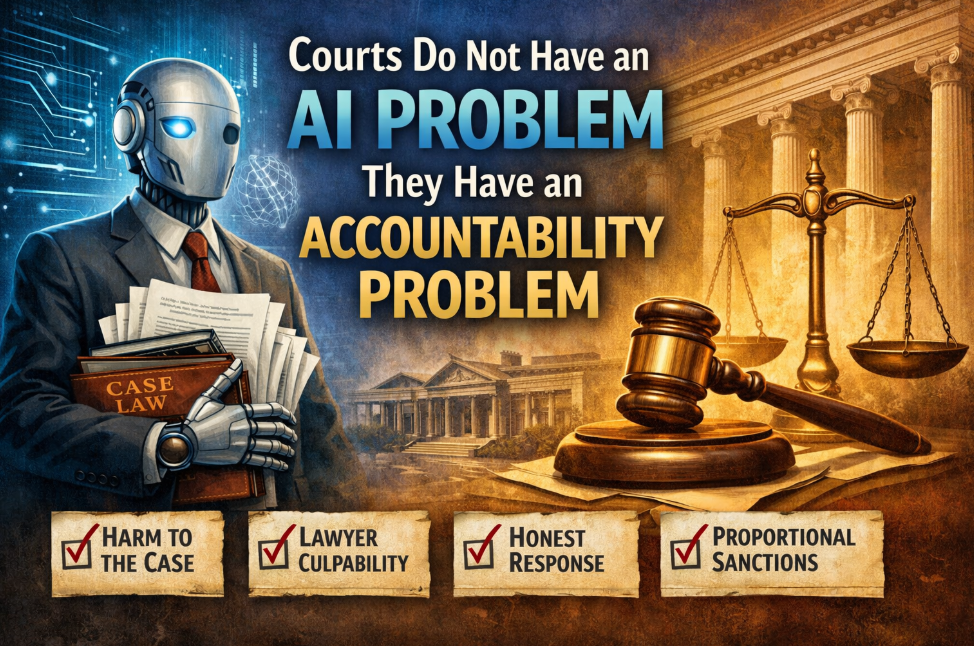

Courts Do Not Have an AI Problem. They Have a Record-Keeping and Accountability Problem.

Courts do not face an artificial intelligence crisis so much as a crisis of accountability. AI-related errors are not evidence of rogue technology; they expose gaps in supervision, verification, and professional responsibility. Judicial legitimacy is threatened not by tools but by inconsistent governance. The question before the courts is not whether AI will be used, but whether responsibility will remain clearly human.

When the Machine Gets It Wrong, Who Pays the Price?

Courts have been clear: artificial intelligence may assist lawyers, but it does not absolve them. When automatic speech recognition (ASR) systems miss testimony or AI summaries omit critical facts, responsibility does not vanish into the software. It lands squarely on the professionals who relied on it. As automation reshapes the legal record, a new reckoning over accountability is quietly approaching.

Court Reporters, Technology, and Reality – Resetting Expectations in a Small Industry

Court reporting technology vendors are not Big Tech. They are small, specialized companies serving a shrinking professional market. Expecting instant, round-the-clock concierge support misunderstands the realities of the industry. Professional competence requires patience, self-sufficiency, and deep knowledge of one’s tools. When a reporter’s livelihood depends entirely on immediate vendor intervention, the risk is not poor service—it is misplaced dependency.

When the Machine Gets It Wrong, the Court Still Blames the Human

Courts across the country are delivering a blunt verdict on artificial intelligence: speed is no excuse for inaccuracy. As lawyers face sanctions for AI-generated errors, judges are reaffirming an old rule in a new era: accountability remains human. In an age of automation, the certified court record and the professionals who create it have never mattered more.

Who Trained the Machine?

AutoScript AI is marketed as a “legal-grade” AI transcription solution trained on “millions of hours of verified proceedings,” yet the company provides no public definition of what verification means in a legal context or where that data originated. Founded and led by technology executive Rene Arvin, the platform reflects a broader trend of general-purpose ASR tools being rebranded for legal use without the transparency traditionally required in court reporting.