The Heppner decision reframes the court-reporting debate. The issue is no longer speed or convenience but legal accountability. Courts protect communications only when a human professional bears fiduciary responsibility. Autonomous recording systems cannot testify, explain decisions, or hold privilege. When the record lacks a sworn custodian, attorneys inherit the risk. The question is simple: if the transcript is challenged, who can take the stand?

Tag Archives: LitigationRisk

Why the AI Privilege Fight Could Decide the Future of Court Reporting

A federal court has drawn a stark line: conversations with AI systems are not privileged. That conclusion reaches far beyond chatbots. Digital recordings, automated deposition summaries, and cloud transcript analytics may transform confidential litigation strategy into discoverable material. The issue is no longer convenience versus tradition — it is custody versus disclosure. When legal data leaves human control, the record itself may become evidence.

When an AI “Note-Taker” Shows Up to a Legal Proceeding

AI note-taking tools may be convenient in business meetings, but their presence in legal proceedings raises serious concerns about confidentiality, chain of custody, and record integrity. When unauthorized bots capture testimony, the official record can be compromised in ways that surface long after the proceeding ends. Protecting the record means understanding when technology crosses a legal line.

When the Machine Gets It Wrong, Who Pays the Price?

Courts have been clear: artificial intelligence may assist lawyers, but it does not absolve them. When automatic speech recognition (ASR) systems miss testimony or AI summaries omit critical facts, responsibility does not vanish into the software. It lands squarely on the professionals who relied on it. As automation reshapes the legal record, a new reckoning over accountability is quietly approaching.

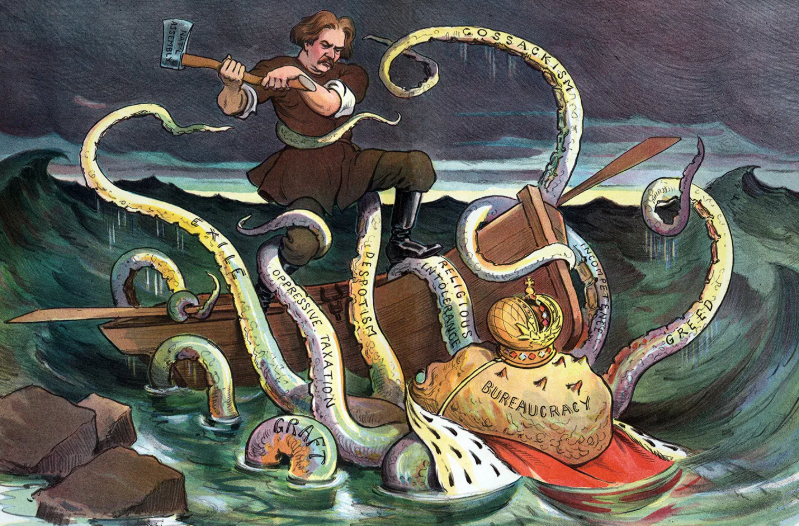

Vendors Are Not Officers of the Court

Court reporting agencies schedule proceedings and process invoices—but they do not create the legal record. Yet some national agencies are now attaching corporate “company certificates” to deposition transcripts they did not take and cannot lawfully certify. This quiet shift blurs statutory boundaries, risks inadmissibility, and threatens due process by substituting branding for licensure in the creation of sworn testimony.

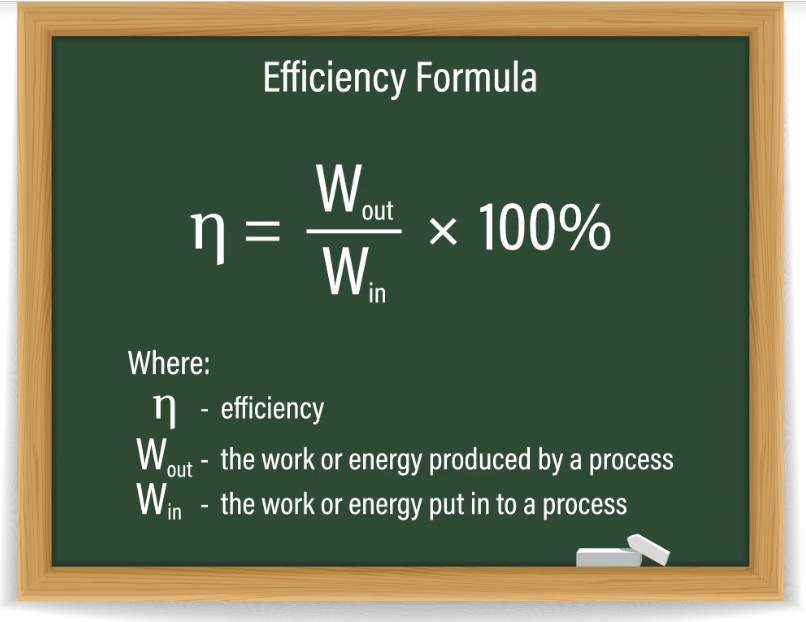

When Efficiency Overrides the Law – Why Badran v. Badran Got Admissibility Wrong

The Badran v. Badran ruling did not affirm professionalism in modern depositions; it excused its absence. Admissibility does not turn on convenience, volume, or after-the-fact agreement. It turns on lawful process and qualified human oversight. Agencies are not officers of the record, and parties cannot stipulate away licensure, evidentiary foundation, or due process in the name of efficiency.

When Practice Drifts From the Code – How Informal Norms Are Reshaping the Courtroom Record

In courtrooms nationwide, a quiet shift is underway. The rules governing the official record remain unchanged, yet everyday practice has drifted from the code. Realtime feeds and rough drafts, once tools for preparation, are increasingly treated as authoritative sources in high-stakes moments. This slow normalization of informality carries real legal risk—for attorneys, judges, and especially the reporters entrusted with preserving the record.

When Capital Moves Faster Than the Courts – AI, Evidence, and the Next Legal Reckoning

As venture capital floods legal technology, artificial intelligence is being woven into the heart of litigation—often faster than courts, ethics rules, or evidentiary standards can respond. Tools that summarize testimony or generate chronologies promise efficiency, but raise unresolved questions about reliability, consent, and admissibility. History shows that when automation outpaces scrutiny, courts eventually intervene—sometimes after irreversible damage has already been done.

Who Trained the Machine?

AutoScript AI is marketed as a “legal-grade” AI transcription solution trained on “millions of hours of verified proceedings,” yet the company provides no public definition of what verification means in a legal context or where that data originated. Founded and led by technology executive Rene Arvin, the platform reflects a broader trend of general-purpose ASR tools being rebranded for legal use without the transparency traditionally required in court reporting.

AI Summaries in Litigation – Efficiency or a Lawsuit Waiting to Happen?

An AI-generated deposition summary missed a crucial medical statement about future surgery, leading an insurance company to undervalue a case, and a jury later awarded millions in excess of the policy limits. Now the question is: Who’s liable? The law firm? The AI vendor? Or the court reporting agency that sold the product? As AI floods legal workflows, expect a wave of litigation over errors in work that never should’ve been automated.