Courts across the country are quietly launching in-house voice-writing programs, training clerks and court staff to become certified reporters. Framed as a solution to shortages, these initiatives shift education inside the institution itself. But when courts become the classroom, deeper questions emerge about independence, professional standards, and who ultimately controls the creation of the legal record.

Tag Archives: CourtroomTechnology

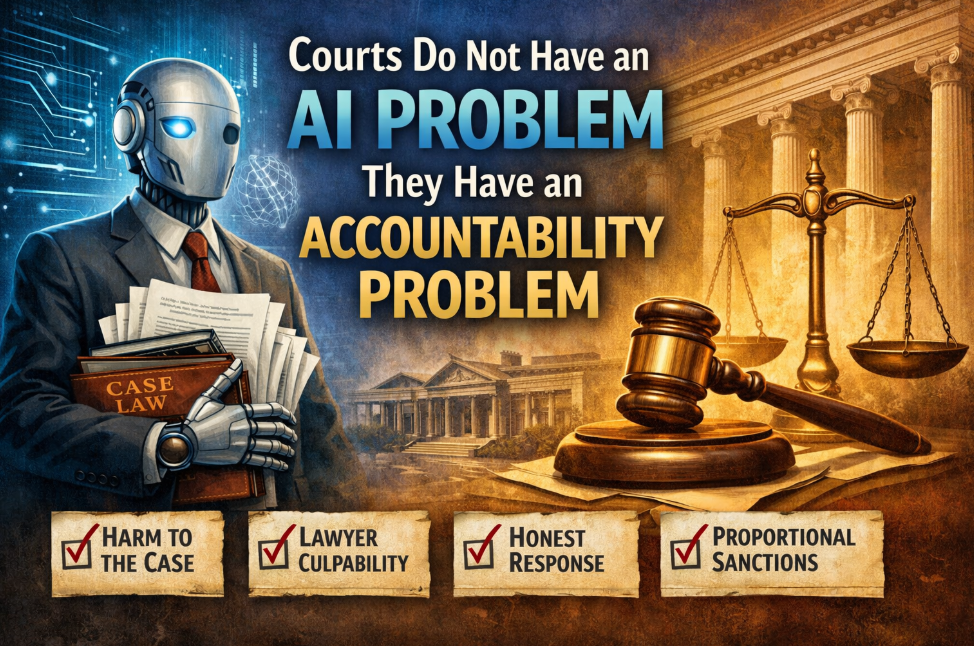

Courts Do Not Have an AI Problem. They Have a Record-Keeping and Accountability Problem.

Courts do not face an artificial intelligence crisis so much as a crisis of accountability. AI-related errors expose gaps in supervision, verification, and professional responsibility, not rogue technology. Judicial legitimacy is threatened not by tools but by inconsistent governance. The question before the courts is not whether AI will be used, but whether responsibility will remain clearly human.

When Machines Become Witnesses – Why the Federal Judiciary’s AI Evidence Proposal Quietly Reinforces the Role of Court Reporters

The federal judiciary’s proposed rule on AI-generated evidence quietly draws a critical line: machine output is not inherently trustworthy and must be tested like expert testimony. That distinction reinforces the structural role of court reporters. A certified transcript is a human-governed legal record, not algorithmic evidence. Once the human layer disappears, the court record itself becomes something the law now admits is dangerous.

Why Judges Shouldn’t Rely on AI Yet – A Cautionary Case Against Generative AI in the Courts

As courts experiment with generative AI, the judiciary risks embracing a technology that is not yet reliable, transparent, or safe enough for justice. From hallucinated legal authority to inaccurate ASR records, today’s AI systems already struggle with basic courtroom functions. Introducing them into judicial workflows now risks compromising confidentiality, fairness, and public trust at the very moment the courts can least afford it.

When the Courtroom Becomes a Dataset – Why Media Recording in 2026 Is No Longer Just “Coverage”

Courtroom recording is no longer simply about cameras and coverage. In 2026, it is about what happens after the audio leaves the room: automated transcription, cloud storage, permanent datasets, and uncontrolled reuse. When proceedings become machine-readable assets, courts risk losing authority over the official record, participant privacy, and the conditions necessary for fair, orderly justice.

The Readback Problem in Voice Writing—and How to Solve It

Readback is where the record proves its reliability. For voice writers, that moment too often collapses into rewind and guesswork when ASR fails. The solution is not better training, but better software: a persistent phonetic fallback, confidence-aware output, and word-level audio that function like steno notes. Voice does not need perfection—it needs an inspectable substrate.

The Voice Writing Question – Is the Fastest Entry Path Quietly Reshaping—and Risking—the Court Reporting Profession?

Voice writing is rapidly being marketed as the fastest path into court reporting, even as it remains unrecognized as stenography by the profession’s own national association. This article examines the growing disconnect between how voice writing is sold and how the legal record actually functions, why many machine reporters are learning voice for longevity—not superiority—and what happens when speed of entry outpaces experience in a profession built on precision.

When Speed Replaces the Record – What “FTR Now” Reveals About the Future of Court Transcription

A new legal tech product promises “searchable transcripts” from courtroom audio in minutes, built in just two days and priced at seven dollars an hour. But speed and convenience come at a cost. When automated transcription is mistaken for the official record, accuracy, accountability, and due process are quietly put at risk—often before attorneys realize the distinction matters.